In the world of intelligent energy systems, calibration isn’t just maintenance—it’s truth-telling. It’s how we make sure the numbers on the screen reflect the real energy inside the cell.

1. Why Calibration Matters

When designing a fuel gauge, many engineers assume that battery characteristics will stay constant over time. But batteries age, and as they do, their internal chemistry drifts.

Aging causes changes in:

· Internal resistance

· Charge acceptance rate

· Voltage curve profile

If left uncalibrated, even a sophisticated fuel gauge can become an unreliable storyteller, showing 100% charge when the capacity behind that reading may have dropped to 70% of its original value or less.
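
The arithmetic behind that mismatch is simple enough to sketch. The snippet below is a minimal illustration, not a real gauge algorithm: the function name and all capacity figures are hypothetical, chosen so that the aged pack holds 70% of its rated capacity.

```python
def gauge_soc_percent(drawn_mah: float, assumed_full_mah: float) -> float:
    """SoC as a gauge computes it: charge left relative to what it believes 'full' is."""
    return 100.0 * (assumed_full_mah - drawn_mah) / assumed_full_mah


RATED_FULL_MAH = 5000.0   # hypothetical capacity when new
AGED_FULL_MAH = 3500.0    # hypothetical real capacity after aging (70% of rated)
DRAWN_MAH = 1750.0        # charge removed since the last full charge

stale = gauge_soc_percent(DRAWN_MAH, RATED_FULL_MAH)   # gauge never relearned 'full'
actual = gauge_soc_percent(DRAWN_MAH, AGED_FULL_MAH)   # same formula, true capacity

print(f"uncalibrated gauge: {stale:.0f}%  actual: {actual:.0f}%")
```

With these assumed numbers the uncalibrated gauge reports 65% while the cell is really at 50%, and both would read 100% right after a full charge.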

For devices like medical instruments, UAVs, defense systems, and EVs, this is more than inconvenience—it’s a mission risk.

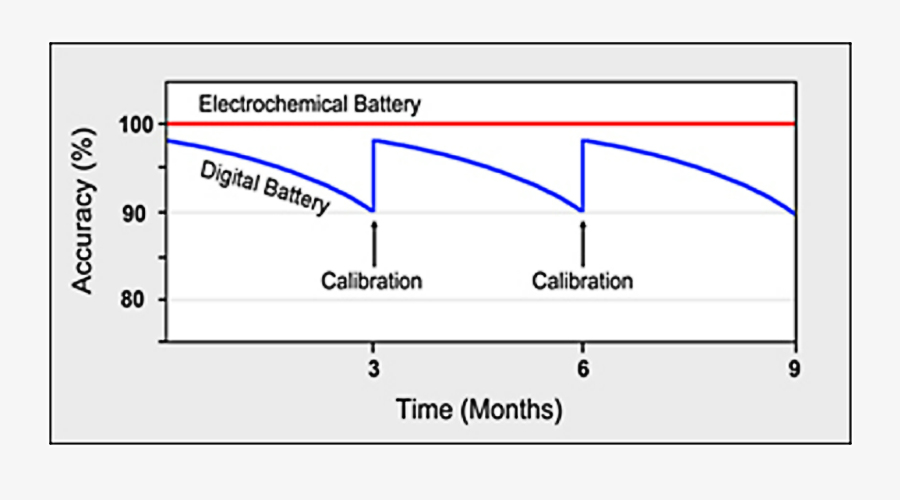

2. The Dual Reality: Chemical vs. Digital Battery

Modern smart batteries consist of two interconnected layers:

| Component | Function |
| --- | --- |
| Chemical Battery | The real, physical energy source. It determines the true runtime and capacity. |
| Digital Battery | The electronic monitoring system that interprets charge/discharge data and predicts state of charge (SoC) and state of health (SoH). |

Over time, the digital model drifts away from the real chemical state, creating a gap in accuracy. Calibration re-aligns them.

(Illustration: Calibration restores alignment between real capacity and digital prediction.)

3. Why Drift Happens

Even though smart batteries are designed to self-calibrate, they rarely get the chance to perform a perfect full discharge in real-world conditions.

Common causes of error accumulation include:

· Partial and irregular discharges

· Pulsed load profiles (e.g., radio bursts, sensors, or drone motors)

· Storage at high temperature, increasing self-discharge

· Interrupted recharge cycles

These irregularities cause the internal algorithms to lose their reference points, gradually skewing SoC accuracy.
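
To see how small errors pile up without a reference point, consider a rough simulation of coulomb counting across many partial cycles. This is only a sketch under stated assumptions: the 2 mA current-sense offset, the 500 mA load, and the cycle shape are hypothetical values, and a real gauge applies corrections that are not modeled here.

```python
def coulomb_count_mah(currents_ma, dt_s, start_mah=0.0):
    """Integrate current samples (positive = charging) into accumulated charge in mAh."""
    charge_mah = start_mah
    for current_ma in currents_ma:
        charge_mah += current_ma * dt_s / 3600.0
    return charge_mah


DT_S = 60.0                 # one sample per minute
SENSOR_OFFSET_MA = 2.0      # hypothetical uncorrected current-sense offset

# One partial cycle: 2 h discharging at 500 mA, then 2 h charging at 500 mA.
half = int(2 * 3600 / DT_S)
one_cycle = [-500.0] * half + [500.0] * half
true_currents = one_cycle * 40                      # 40 partial cycles, net zero charge
measured = [i + SENSOR_OFFSET_MA for i in true_currents]

true_change = coulomb_count_mah(true_currents, DT_S)
drift_mah = coulomb_count_mah(measured, DT_S) - true_change
print(f"accumulated drift after 40 partial cycles: {drift_mah:.0f} mAh")
```

The offset is tiny compared with the load, yet over 160 hours it integrates to roughly 320 mAh of error, because no full-charge or full-discharge event ever resets the count.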

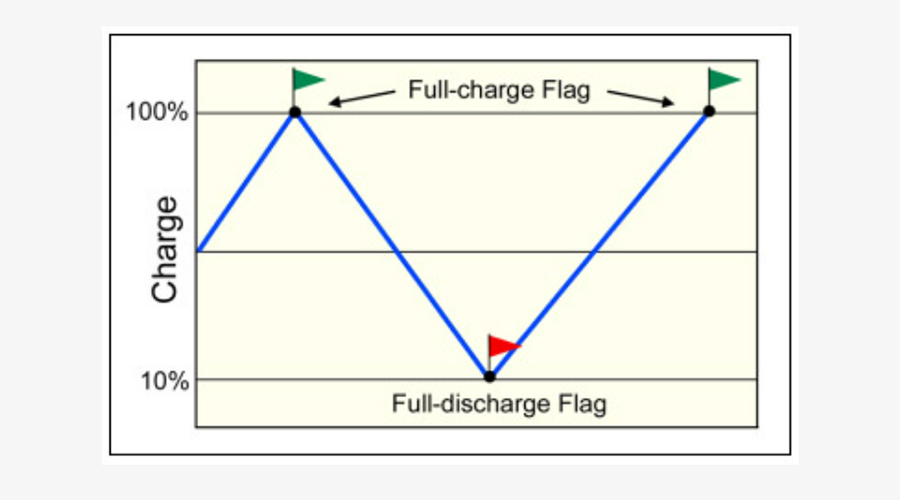

4. Manual Calibration: Restoring True Accuracy

To restore measurement precision, a manual calibration cycle is required.

Recommended Procedure:

- Discharge the battery fully within its host device until a “Low Battery” alert appears.

- Recharge the battery to 100% in one uninterrupted session.

- Let the battery rest after charging so the system can record the full-discharge and full-charge flags.

This process re-establishes both endpoints of the gauge's scale, empty and full, and the linear relationship between them, giving SoC and SoH estimation a refreshed baseline.

| Step | Purpose |
| --- | --- |
| Full Discharge | Sets the “empty” anchor point |
| Full Charge | Sets the “full” anchor point |
| Post-Cycle Rest | Stabilizes readings and updates internal SoC model |
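
The anchoring logic can be sketched as a small state tracker. This is an illustrative simplification rather than how any particular fuel gauge IC works: the class, its method names, and the choice to learn capacity during the charge phase are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class CalibrationTracker:
    """Hypothetical tracker that relearns full-charge capacity between two anchors."""
    fcc_mah: float              # current full-charge-capacity estimate
    empty_seen: bool = False    # True once the "empty" anchor has been recorded
    counted_mah: float = 0.0    # charge counted since the empty anchor
    calibrated: bool = False

    def on_empty_flag(self) -> None:
        # Full discharge reached: set the "empty" anchor and restart counting.
        self.empty_seen = True
        self.counted_mah = 0.0
        self.calibrated = False

    def on_charge(self, current_ma: float, dt_s: float) -> None:
        # Count charge only while a calibration cycle is in progress.
        if self.empty_seen and current_ma > 0:
            self.counted_mah += current_ma * dt_s / 3600.0

    def on_interruption(self) -> None:
        # The recharge was not one uninterrupted session: discard this attempt.
        self.empty_seen = False
        self.counted_mah = 0.0

    def on_full_flag(self) -> None:
        # Full charge reached: the counted charge becomes the new capacity estimate.
        if self.empty_seen:
            self.fcc_mah = self.counted_mah
            self.calibrated = True
            self.empty_seen = False


tracker = CalibrationTracker(fcc_mah=5000.0)   # stale estimate, hypothetical value
tracker.on_empty_flag()
for _ in range(210):                           # 3.5 h at 1000 mA, one sample per minute
    tracker.on_charge(1000.0, 60.0)
tracker.on_full_flag()
print(round(tracker.fcc_mah), tracker.calibrated)
```

An interrupted recharge leaves the old estimate untouched in this sketch, which mirrors why the procedure asks for a single uninterrupted session.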

Calibration Frequency:

· Every 3 months or after ~40 partial cycles.

· Systems that naturally perform periodic deep cycles (like some EVs or drones) often self-calibrate automatically.
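
A maintenance tool could encode this schedule as a simple check. The sketch below assumes that "3 months" is approximated as 90 days; the function and constant names are hypothetical.

```python
from datetime import date, timedelta

MAX_INTERVAL = timedelta(days=90)   # assumption: "3 months" approximated as 90 days
MAX_PARTIAL_CYCLES = 40             # threshold taken from the guideline above


def calibration_due(last_calibration: date, today: date, partial_cycles: int) -> bool:
    """Return True when either the time limit or the cycle limit has been reached."""
    too_old = (today - last_calibration) >= MAX_INTERVAL
    too_many_cycles = partial_cycles >= MAX_PARTIAL_CYCLES
    return too_old or too_many_cycles


print(calibration_due(date(2024, 1, 1), date(2024, 2, 1), partial_cycles=12))
print(calibration_due(date(2024, 1, 1), date(2024, 2, 1), partial_cycles=41))
```

The first call reports that no calibration is needed yet, while the second trips the cycle limit even though only a month has passed.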

5. Using Battery Analyzers

For critical applications, battery analyzers offer laboratory-level calibration accuracy.

They measure chemical capacity directly by applying a controlled charge and discharge cycle.

This method provides more accurate data than the internal coulomb counter, which estimates capacity based on past events rather than live measurement.

| Measurement Type | Description | Accuracy Level |
| --- | --- | --- |
| Coulomb Counting | Uses current integration | ±5–8% typical |
| Analyzer Discharge | Measures real delivered energy | ±1–2% |
| Hybrid SoH Algorithms | Combines impedance and coulomb data | ±3–5% |
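
The analyzer approach boils down to integrating what the battery actually delivers during a controlled discharge. The following sketch shows one way to do that from logged samples using the trapezoidal rule. The sample format, the cutoff voltage, and the numbers are hypothetical and do not describe any specific analyzer.

```python
def delivered_capacity(samples, cutoff_v):
    """Integrate a discharge log into delivered charge (mAh) and energy (Wh).

    samples: list of (time_s, discharge_current_a, voltage_v) tuples in time order.
    Integration stops at the first sample whose voltage is below cutoff_v.
    """
    mah = 0.0
    wh = 0.0
    for (t0, i0, v0), (t1, i1, v1) in zip(samples, samples[1:]):
        if v1 < cutoff_v:
            break                                   # the interval crossing cutoff is excluded
        dt_h = (t1 - t0) / 3600.0
        mah += 0.5 * (i0 + i1) * dt_h * 1000.0      # trapezoid on current
        wh += 0.5 * (i0 * v0 + i1 * v1) * dt_h      # trapezoid on power
    return mah, wh


# Hypothetical log: constant 1 A load, sampled hourly, cutoff at 3.0 V.
log = [(0, 1.0, 4.0), (3600, 1.0, 3.6), (7200, 1.0, 3.2), (10800, 1.0, 2.9)]
capacity_mah, energy_wh = delivered_capacity(log, cutoff_v=3.0)
print(f"{capacity_mah:.0f} mAh, {energy_wh:.1f} Wh")
```

A real analyzer samples far more often than once per hour; the coarse log here only keeps the arithmetic easy to follow.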

6. What Happens If You Skip Calibration?

Skipping calibration doesn’t usually cause safety hazards, since chargers primarily obey the chemical battery’s limits.

However:

· The digital fuel gauge may show inaccurate runtime predictions.

· Capacity readings drift, creating confusion during maintenance or quality checks.

· Fleet management data for battery health may become unreliable.

For professional or industrial systems, periodic calibration ensures data integrity and predictable performance.

7. Impedance Tracking: The Self-Learning Alternative

Some advanced smart batteries integrate impedance tracking, a self-learning algorithm that continuously measures internal resistance to infer SoH and SoC.

Advantages:

· Reduces manual calibration needs

· Adapts to usage and aging automatically

However, after extensive use or environmental stress, even impedance-tracking batteries may require multiple calibration cycles to restore accuracy.
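
Commercial impedance-tracking algorithms are proprietary and considerably more involved, but the underlying measurement can be illustrated with a simple DC resistance estimate from a load step. Everything in this sketch, including the function names, the voltages, and the 50 mΩ "new" reference, is a hypothetical example and not a vendor's method.

```python
def dc_resistance_ohm(v_rest: float, v_load: float, i_rest_a: float, i_load_a: float) -> float:
    """Estimate internal resistance from the voltage drop caused by a current step."""
    delta_i = i_load_a - i_rest_a
    if delta_i == 0:
        raise ValueError("a current step is needed to estimate resistance")
    return (v_rest - v_load) / delta_i


def resistance_growth(r_now_ohm: float, r_new_ohm: float) -> float:
    """Ratio of present resistance to the resistance when new (1.0 = no aging)."""
    return r_now_ohm / r_new_ohm


# Hypothetical step: the cell sags from 4.10 V to 3.95 V when a 2 A load is applied.
r_now = dc_resistance_ohm(v_rest=4.10, v_load=3.95, i_rest_a=0.0, i_load_a=2.0)
growth = resistance_growth(r_now, r_new_ohm=0.050)
print(f"R = {r_now * 1000:.0f} mOhm, growth = {growth:.2f}x")
```

A rising growth ratio is the kind of signal a self-learning gauge can fold into its SoH estimate between full calibration cycles.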

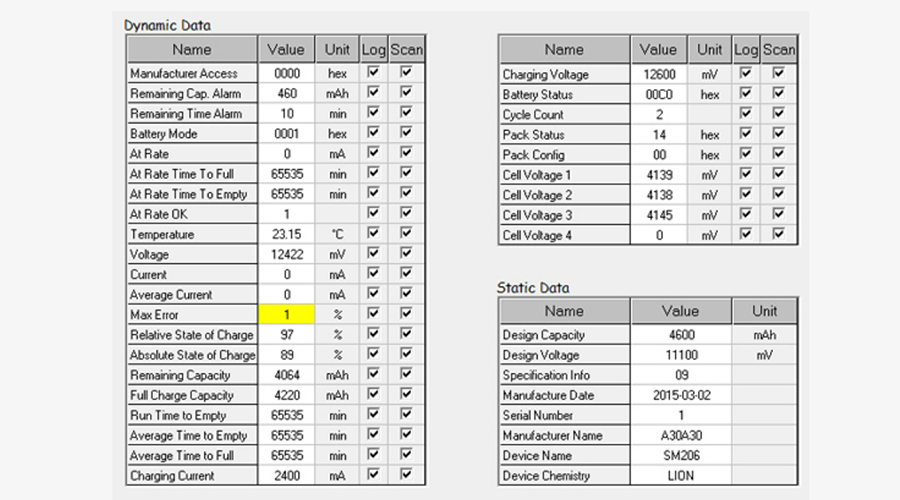

8. Understanding Max Error

The gap between digital and chemical performance is quantified as Max Error—the maximum deviation between measured and actual charge.

| Max Error (%) | Interpretation |
| --- | --- |
| ≤ 8% | Excellent alignment |
| 9–12% | Moderate drift (recalibration advised) |
| > 16% | Out of tolerance (battery may be unserviceable) |

Although there’s no global standard for acceptable Max Error thresholds, maintaining values below 10% is recommended for professional-grade energy systems.
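
A service tool might apply the bands from the table as follows. This is a sketch with hypothetical function names. It assumes Max Error arrives as a whole-number percentage, and because the table does not assign an interpretation to values from 13% to 16%, the sketch reports those as not covered instead of guessing.

```python
def classify_max_error(max_error_percent: int) -> str:
    """Map a whole-number Max Error percentage onto the bands in the table."""
    if max_error_percent < 0:
        raise ValueError("Max Error cannot be negative")
    if max_error_percent <= 8:
        return "excellent alignment"
    if max_error_percent <= 12:
        return "moderate drift, recalibration advised"
    if max_error_percent > 16:
        return "out of tolerance, battery may be unserviceable"
    return "not covered by the table"   # 13% to 16% has no stated interpretation


def within_recommendation(max_error_percent: int) -> bool:
    """Check the 'below 10%' recommendation for professional-grade systems."""
    return max_error_percent < 10


for value in (5, 11, 14, 20):
    print(value, classify_max_error(value), within_recommendation(value))
```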

9. Data Transparency via SMBus

Smart batteries using the SMBus protocol provide rich diagnostic data, including:

· Battery ID, model, serial number, and manufacturing date

· Cell-level voltage and temperature

· Estimated runtime

· Full Charge Capacity (FCC) and Coulomb Count
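
Much of this data is exposed through standard command codes defined by the Smart Battery Data Specification. The sketch below lists a few of those codes and decodes two raw 16-bit words. It assumes the words have already been read by some SMBus host (no bus access is shown), and the example word values are made up for illustration.

```python
from datetime import date

# A subset of standard Smart Battery Data Specification command codes.
SBS_COMMANDS = {
    "Temperature": 0x08,             # units of 0.1 K
    "Voltage": 0x09,                 # mV
    "MaxError": 0x0C,                # percent
    "RelativeStateOfCharge": 0x0D,   # percent
    "RemainingCapacity": 0x0F,
    "FullChargeCapacity": 0x10,
    "RunTimeToEmpty": 0x11,          # minutes
    "CycleCount": 0x17,
    "ManufactureDate": 0x1B,         # packed date word
    "SerialNumber": 0x1C,
}


def decode_manufacture_date(word: int) -> date:
    """Unpack the date word: (year - 1980) * 512 + month * 32 + day."""
    day = word & 0x1F
    month = (word >> 5) & 0x0F
    year = 1980 + (word >> 9)
    return date(year, month, day)


def decode_temperature_c(word: int) -> float:
    """Convert a temperature word in 0.1 K units to degrees Celsius."""
    return word / 10.0 - 273.15


# Made-up raw words, as if returned by a bus read.
print(decode_manufacture_date(22223))
print(f"{decode_temperature_c(2982):.2f} C")
```

Decoding on the host side like this is also where a product can decide how much of the raw data to surface to the end user.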

While this data is invaluable for engineers and service tools, it can overwhelm end-users.

A nurse, a soldier, or a field technician only needs to know one thing: “Will it last through my mission?”

At Himax, our systems balance data richness with clarity, enabling OEMs to see the analytics behind the scenes while users get simple, reliable runtime feedback.

Himax Perspective: Calibrating for Reliability

Calibration is not just about adjusting readings—it’s about sustaining trust between the battery, the device, and the human relying on it.

At Himax Battery, our smart energy platforms combine advanced fuel gauge ICs, coulomb counting, and impedance learning to deliver enduring precision.

We design systems that self-correct, communicate clearly, and maintain long-term accuracy under the most demanding industrial and defense conditions.

Because in power systems, precision isn’t optional—it’s survival.